Local-first AI assistant Kin leverages Turso's libSQL for on-device native vector search

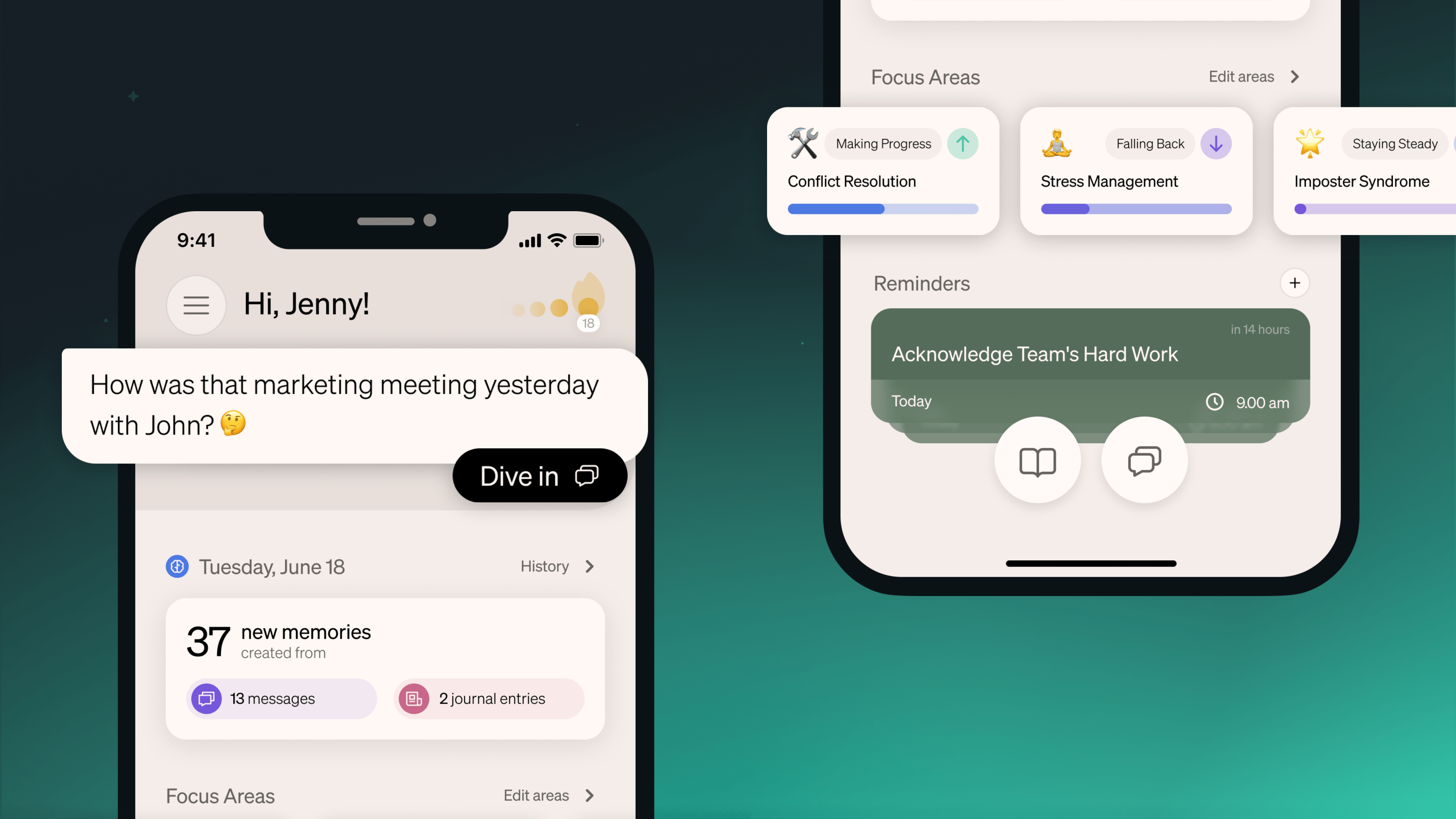

Kin is a personal AI for your work and personal life. We think personal AI and AI companions are going to become a really big thing, and we've tried to build a combination of a friend, a coach, and a mentor. It's available 24 hours a day, 7 days a week, to support you when you need to relieve stress or when you have a serious problem you want input on.

To make this possible, we're focused on understanding the user and building Kin's long-term memory. We use NLP techniques to extract structured information from the user's input, mapping out the relationships and timelines of the user's life, so the more you use the AI, the better it gets.

But the foundation of every relationship is trust. When we began this journey two years ago, we decided to focus on privacy and on helping users take control of their data, so that they're in the driver's seat. For AI to be more than just transactional, it needs trust.

# Local-first for trust

That's why we built Kin as a local-first mobile app that runs as much as possible on-device. Running machine learning on-device also lets us take advantage of the AI capabilities built into new generations of mobile chips, and on-device inference in turn requires the data to be local.

All of your data lives on your device; we don't even have a cloud to host it, so there is no server of ours that can be hacked to get your data. It is stored locally in Turso-powered, SQLite-compatible database files.

We also perform vector search locally, directly on the database, thanks to libSQL, Turso's open-contribution fork of SQLite, which supports vector embeddings as a native data type.

That also means we can do Retrieval-Augmented Generation (RAG) on-device.
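To make the idea concrete, here is a minimal sketch of the retrieval step: embeddings stored as BLOBs next to the text they describe, a nearest-neighbour query in plain SQL, and the results folded into a prompt. It is not Kin's code and does not use libSQL itself. It runs on Python's standard `sqlite3` module, so the cosine distance is registered as a user-defined function. libSQL provides this natively (its documentation describes an `F32_BLOB(N)` column type and a `vector_distance_cos()` function; check the docs for exact names). The table `memories`, the helpers `to_blob`, `cosine_distance` and `top_k`, the SQL function name `cos_dist`, and the 3-dimensional embeddings are all hypothetical, chosen only for illustration.

```python
import math
import sqlite3
import struct


def to_blob(vec):
    """Pack a list of floats as little-endian float32, one BLOB per embedding."""
    return struct.pack(f"<{len(vec)}f", *vec)


def cosine_distance(a_blob, b_blob):
    """Cosine distance (1 - cosine similarity) between two float32 BLOBs."""
    n = len(a_blob) // 4
    a = struct.unpack(f"<{n}f", a_blob)
    b = struct.unpack(f"<{n}f", b_blob)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


conn = sqlite3.connect(":memory:")
# Hypothetical stand-in for libSQL's built-in distance function, registered
# as a user-defined SQL function so the query below runs on stock SQLite.
conn.create_function("cos_dist", 2, cosine_distance)
conn.execute("CREATE TABLE memories (id INTEGER PRIMARY KEY, text TEXT, embedding BLOB)")

# Toy 3-dimensional embeddings; real embedding models output hundreds of dimensions.
rows = [
    ("Alex is my manager", [0.9, 0.1, 0.0]),
    ("I run on Sunday mornings", [0.0, 0.2, 0.9]),
    ("Quarterly review is next week", [0.8, 0.3, 0.1]),
]
conn.executemany(
    "INSERT INTO memories (text, embedding) VALUES (?, ?)",
    [(text, to_blob(vec)) for text, vec in rows],
)


def top_k(query_vec, k=2):
    """Brute-force nearest neighbours: score every row, keep the k closest."""
    cur = conn.execute(
        "SELECT text FROM memories ORDER BY cos_dist(embedding, ?) LIMIT ?",
        (to_blob(query_vec), k),
    )
    return [text for (text,) in cur]


# The retrieved memories become context for the model prompt (the "R" in RAG).
context = top_k([0.85, 0.2, 0.05])
prompt = "Context:\n" + "\n".join(context) + "\n\nUser: How should I prepare for work?"
print(context)
```

This sketch scans every row, which is fine for a handful of memories but grows linearly with the table. libSQL also offers an approximate-nearest-neighbour vector index for larger data sets; consult its documentation before relying on specific index syntax.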

All of this adds up to a snappier user experience, because the app relies less on cloud servers and isn't held back by network latency.

# Why Turso?

We explored a lot of database options. We found one we thought was the right fit: a really cool graph engine, written in Rust, that supports vector embeddings and transactions. We got it running in React and spent a long time trying to make it production-ready, but ultimately it wasn't performant enough to be viable.

So we went back to tried-and-true SQLite with a plugin for vector search, but it was too buggy for production, and it forced us into inefficient usage patterns for SQLite.

That's when we found libSQL by Turso. It was essentially SQLite with native vector embeddings added, and it ran efficiently and scaled effortlessly, just as SQLite should.

It also meant we needed only one on-device database for everything. Running two separate databases on a phone, one of them a dedicated vector database, would be very challenging: the setup has to be fault-tolerant in a very resource-constrained environment. It's much easier when everything happens inside the same transaction, especially when there's zero network lag because it's all local.
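The benefit of a single transaction can be sketched in a few lines. Below, a memory and its embedding are written together, and a failure partway through (simulated here by a missing embedding) rolls back both writes, so the text and the vector can never drift out of sync. This is an illustration under stated assumptions, not Kin's schema: Python's standard `sqlite3` stands in for libSQL, and the tables `memories` and `memory_embeddings` and the helper `save_memory` are hypothetical names.

```python
import sqlite3
import struct

conn = sqlite3.connect(":memory:")
# Hypothetical schema: relational data and vector data in the same database.
conn.executescript(
    """
    CREATE TABLE memories (id INTEGER PRIMARY KEY, text TEXT NOT NULL);
    CREATE TABLE memory_embeddings (
        memory_id INTEGER PRIMARY KEY REFERENCES memories(id),
        embedding BLOB NOT NULL
    );
    """
)


def save_memory(text, embedding):
    """Write the memory and its embedding atomically: both rows or neither."""
    with conn:  # commits on success, rolls back if the block raises
        cur = conn.execute("INSERT INTO memories (text) VALUES (?)", (text,))
        blob = None if embedding is None else struct.pack(f"<{len(embedding)}f", *embedding)
        # embedding is NOT NULL, so a missing embedding raises IntegrityError
        # here, and the rollback also undoes the memories insert above.
        conn.execute(
            "INSERT INTO memory_embeddings (memory_id, embedding) VALUES (?, ?)",
            (cur.lastrowid, blob),
        )


save_memory("Alex is my manager", [0.9, 0.1, 0.0])
try:
    save_memory("Half-written memory", None)  # simulated failure mid-write
except sqlite3.IntegrityError:
    pass

count = conn.execute("SELECT COUNT(*) FROM memories").fetchone()[0]
print(count)  # 1
```

With two separate databases, the same guarantee would need cross-database coordination (or cleanup logic for half-finished writes); with one database, the engine's ordinary transaction handles it.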

# The future

As for Turso, we're excited about the future of libSQL and the Turso platform. And we can't wait for you to try Kin: please download the app and let us know what you think. We've put a lot of care into this product, and we think you'll love your new personal AI companion that lives on your device and knows you well.