Offline data access: a dream come true?

What would you do if you suddenly could not access the internet? Many Canadians recently found out the hard way, when the Rogers outage took down almost a third of the country for an entire day. I myself felt like I was back in the Stone Age.

Although day-long outages are rare, people ride subways, take flights, and do many other things during which connectivity is spotty at best. And even if your connection works perfectly, every network round trip adds latency to your applications.

#Consistency models for distributed systems

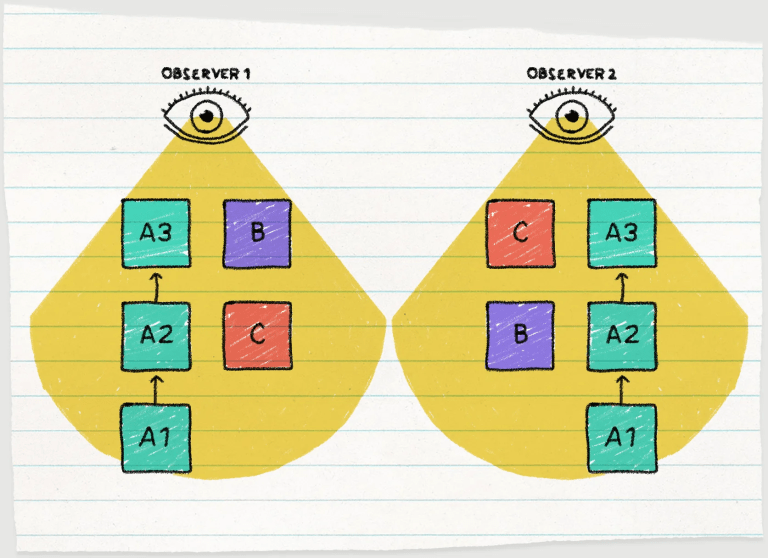

In distributed systems speak, the guarantees a system provides when two locations try to change the same piece of data are broadly referred to as the consistency model. As with many things in life, consistency is a spectrum.

#Strong consistency

On one side of that spectrum is strong consistency: this model provides the illusion that there is a single global copy of the data that all users share. All updates are seen by everyone, and changes are immediately visible. This is the easiest model to reason about, but as the recent Canadian experience demonstrated, when the True North Strong and Free got disconnected, it quickly became the True North Strong and… screwed: strong consistency means nothing can get done without talking to the network, sacrificing both latency and availability.

Strong consistency is the model adopted by many established databases like Postgres and MySQL, and also by higher-level data platforms like ChiselStrike.

#Eventual consistency

On the other end of the spectrum is eventual consistency. Eventual consistency allows parts of the network to operate independently, and guarantees that at some point in the future, under certain circumstances, data will converge. I have a friend who asked me for some money 10 years ago, saying he'd pay it back eventually. It's been 10 years, but because he said eventually, technically he hasn't defaulted yet.

In practice, eventual consistency is not quite as bad for applications, but the model does push complexity onto them: they now need to resort to various tricks to keep eventual consistency from leaking through to the user.

#What's in the middle?

In the middle of that spectrum, there is causal consistency. Broadly speaking, causal consistency means that operations that follow each other in a causal chain are seen by everybody in the same order. But independent operations can be shuffled around and be seen by different parts of the network in any order.
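One common way to track whether two operations are causally related or independent is a vector clock. The sketch below is only an illustration of that general technique, not something the systems discussed here are stated to use; the names `VectorClock`, `compare`, `tick`, and `merge` are made up for this example.

```typescript
// Hypothetical names throughout; a minimal vector clock sketch.
type VectorClock = Record<string, number>;

type Ordering = "before" | "after" | "equal" | "concurrent";

// Compare two clocks entry by entry; a missing entry counts as 0.
function compare(a: VectorClock, b: VectorClock): Ordering {
  let aAhead = false;
  let bAhead = false;
  const nodes = Object.keys(a).concat(Object.keys(b));
  for (const node of nodes) {
    const av = a[node] ?? 0;
    const bv = b[node] ?? 0;
    if (av > bv) aAhead = true;
    if (bv > av) bAhead = true;
  }
  if (aAhead && bAhead) return "concurrent"; // independent operations
  if (aAhead) return "after";
  if (bAhead) return "before";
  return "equal";
}

// A replica bumps its own counter when it performs a local write.
function tick(clock: VectorClock, node: string): VectorClock {
  return { ...clock, [node]: (clock[node] ?? 0) + 1 };
}

// On receiving a remote update, take the entry-wise maximum.
function merge(a: VectorClock, b: VectorClock): VectorClock {
  const out: VectorClock = { ...a };
  for (const node of Object.keys(b)) {
    out[node] = Math.max(out[node] ?? 0, b[node] ?? 0);
  }
  return out;
}

const post = tick({}, "alice");             // Alice writes a post
const reply = tick(merge({}, post), "bob"); // Bob sees the post, then replies
const unrelated = tick({}, "carol");        // Carol writes independently
```

Here `compare(post, reply)` reports that the post happened before the reply, so every replica must show them in that order, while `compare(reply, unrelated)` reports the two as concurrent, so replicas are free to see them in either order.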

To make the model practical for applications, one can move slightly towards strong consistency by augmenting causal consistency with convergent conflict handling, a combination known as the causal+ model: when conflicts do happen, they are resolved using the same method in all replicas, for example, last writer wins.

A popular way to provide convergent conflict handling is CRDTs (Conflict-free Replicated Data Types): data structures that allow two replicas to be updated without coordination (so updates can happen even if the network is down) and ensure that when two replicas have seen the same set of updates, they converge to the same state.
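To make "last writer wins" and convergence concrete, here is a minimal sketch of a last-writer-wins register, one of the simplest CRDTs. The names `LwwRegister` and `mergeLww` are hypothetical, and the sketch assumes each write carries a timestamp plus a replica ID that is used only to break ties.

```typescript
// Hypothetical names; a minimal last-writer-wins (LWW) register sketch.
interface LwwRegister<T> {
  value: T;
  timestamp: number; // assumed: logical or wall-clock time of the write
  replicaId: string; // assumed: unique per replica, used only to break ties
}

// Merge is deterministic and order-independent: every replica that has seen
// the same two writes picks the same winner.
function mergeLww<T>(a: LwwRegister<T>, b: LwwRegister<T>): LwwRegister<T> {
  if (a.timestamp !== b.timestamp) {
    return a.timestamp > b.timestamp ? a : b;
  }
  return a.replicaId >= b.replicaId ? a : b;
}

// Two devices edit the same todo title while offline.
const phone: LwwRegister<string> = { value: "Buy milk", timestamp: 10, replicaId: "phone" };
const laptop: LwwRegister<string> = { value: "Buy oat milk", timestamp: 12, replicaId: "laptop" };

// Each side merges in a different order, yet both end up with the same value.
const seenByPhone = mergeLww(phone, laptop);
const seenByLaptop = mergeLww(laptop, phone);
```

The price of this simplicity is that the losing write is silently discarded, which is acceptable for a todo title but not for something like an account balance.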

#Replicache: offline first that just makes sense

There is a growing movement around the idea that before even trying to reach the network, things should happen in-browser. Updates can be done locally, and large parts of the dataset, if not the full thing, are present in the client itself. You don't get faster than that, since there are no more network round trips, and should your connection be interrupted, you may not even notice (although in our case in Canada, I am pretty sure that after some hours… we'd notice).

Leading the offline-first movement is Replicache.

When I first saw Replicache, a network partition of sorts immediately formed between my upper jaw and my lower jaw, which promptly reached for the floor. “Wow, this is COOL”.

Replicache shares many insights with us at ChiselStrike: in particular, lots of modern applications work with surprisingly little data.

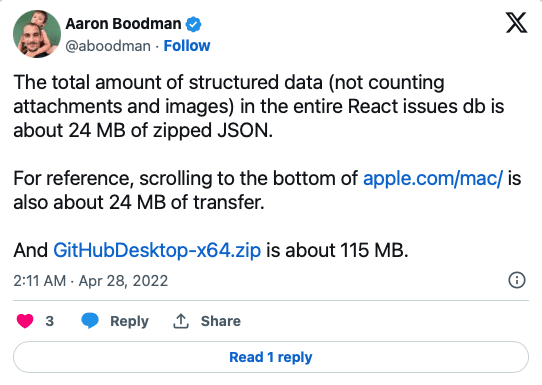

As Aaron (creator of Replicache) points out, the entire GitHub issues database for React, which is not exactly your weekend hobbyist project, fits in 24MB. That means the whole thing can be downloaded in less than a second on a fast connection if need be, after which everything can happen offline, with CRDTs making sure that even updates are allowed.

At a very high level, the way Replicache works is that you first construct a client object where you specify two URLs: a push URL (for writes) and a pull URL (for reads):

    import {Replicache} from "replicache";

    const rep = new Replicache({
      pushURL: '/api/replicache-push',
      pullURL: '/api/replicache-pull',
      ...
    });

You then use the client object, for example, with useSubscribe from the replicache-react package in your React application code as follows:

    const todos = useSubscribe(rep, async tx => {
      return await tx.scan({prefix: 'todo/'}).toArray();
    });

    return (
      <ul>
        {todos.map(todo => (
          <li key={todo.id}>{todo.text}</li>
        ))}
      </ul>
    );

Replicache deals with all the logic of pushing and pulling to the backend under the hood, providing extremely low latency and freeing the developer from worrying about the details of the network going down.
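To get a feel for what "write locally, sync later" means, here is a toy sketch of the general idea. It is not Replicache's implementation or API: `LocalFirstStore`, `Mutation`, and the `send` callback are hypothetical names, and a real transport would be asynchronous; a synchronous callback just keeps the sketch short.

```typescript
// Toy illustration only; all names here are hypothetical, not Replicache APIs.
type Mutation = { id: number; key: string; value: unknown };

class LocalFirstStore {
  private data = new Map<string, unknown>();
  private pending: Mutation[] = [];
  private nextId = 1;

  // Apply the write locally right away and remember it for a later push.
  set(key: string, value: unknown): void {
    this.data.set(key, value);
    this.pending.push({ id: this.nextId, key, value });
    this.nextId += 1;
  }

  // Reads are served from local state and never touch the network.
  get(key: string): unknown {
    return this.data.get(key);
  }

  pendingCount(): number {
    return this.pending.length;
  }

  // Push queued mutations in order. `send` returns false when the backend is
  // unreachable; undelivered mutations stay queued for the next attempt.
  // Returns how many mutations were delivered.
  flush(send: (m: Mutation) => boolean): number {
    let delivered = 0;
    for (;;) {
      const next = this.pending[0];
      if (next === undefined || !send(next)) return delivered;
      this.pending.shift();
      delivered += 1;
    }
  }
}

const store = new LocalFirstStore();
store.set("todo/1", { text: "Buy milk" });
store.set("todo/2", { text: "Call mom" });

// Stand-ins for a backend that is down and one that is reachable.
const offline = (_m: Mutation): boolean => false;
const online = (_m: Mutation): boolean => true;
```

Calling `store.flush(offline)` delivers nothing and leaves both writes queued, while the data stays readable through `store.get`; a later `store.flush(online)` drains the queue in the original order.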

#ChiselStrike and Replicache: a match made in heaven?

Replicache is a bring-your-own-backend solution, so you can pair it with any backend you want, behind any interface. And if you want a one-stop shop for all of your backend needs, one that can transparently host your data layer and business logic and stay with you all the way from prototype to production, you can use ChiselStrike.

Most likely, applications need a mix of both strong consistency and offline-first behavior. If you are handling payments, for example, you would hope that the payments are strongly consistent. Users would expect that too, so they wouldn't mind waiting on a loading bar. But the payment history can be offline-first.

With the REST endpoints provided by ChiselStrike, you can have guarantees of strong consistency when it matters, and still easily take advantage of Replicache's causal+ consistency model to keep your application working offline.

#The best backend for Replicache?

But you must be thinking… many things out there follow strong consistency. What makes ChiselStrike particularly appealing?

First, although you can deploy ChiselStrike to our infrastructure, ChiselStrike has a fully offline mode that allows you to develop locally on your laptop, including embedded databases.

But it also abstracts away the data layer altogether. You can define entities in pure TypeScript without setting up databases or migrations, or even worrying about queries at all. You can also add the Replicache business logic straight into the endpoint, rather than paying for many round trips to fetch all the data that is needed.

The result is a REST endpoint that can then be accessed as an independent, strongly consistent API, or easily consumed by Replicache, with any business logic we may want attached to it.

If we look at the pull endpoint, for example, all you need to do is define some entities (Client, Entry and Space), and then write an endpoints/replicache-pull.ts file with something like:

    import { Client } from '../models/Client';
    import { Entry } from '../models/Entry';
    import { Space } from '../models/Space';

    // A patch operation sent back to Replicache: delete a key or put a value.
    type UserValue =
      | {
          op: 'del';
          key: string;
        }
      | {
          op: 'put';
          key: string;
          value: unknown;
        };

    // Convert a stored Entry into the matching patch operation.
    function transformEntry(entry: Entry): UserValue {
      if (entry.deleted) {
        return { op: 'del', key: entry.key };
      } else {
        const value = JSON.parse(entry.value);
        return { op: 'put', key: entry.key, value: value };
      }
    }

    export default async function (req: Request): Promise<Response> {
      const url = new URL(req.url);
      const spaceID = url.searchParams.get('spaceID');
      const pull = await req.json();

      // The cookie sent by the client is compared against entry versions
      // below; it defaults to 0 when absent.
      const requestCookie = pull.cookie ?? 0;
      const clientID = pull.clientID;

      const lastMutationID = (await Client.findOne({ clientID }))?.lastmutationid;
      const responseCookie = (await Space.findOne({ spaceID }))?.version;

      // Only entries in this space with a version newer than the cookie.
      const patch = Entry.cursor()
        .filter(
          (entry) => entry.spaceid == spaceID && entry.version > requestCookie,
        )
        .map((x) => transformEntry(x));

      return {
        lastMutationID: lastMutationID ?? 0,
        cookie: responseCookie ?? 0,
        patch,
      };
    }

The response to Replicache contains an array of patch elements, each of which represents a mutation on the data. In this case, they're the todo entries. Deletions are represented with a del operation for conflict resolution purposes.
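To illustrate what a client does with such a patch, here is a minimal sketch that applies put and del operations to a local key-value map. It mirrors the `UserValue` shape from the endpoint above, but `PatchOp` and `applyPatch` are hypothetical names, and this is not Replicache's actual client code.

```typescript
// Hypothetical helper; mirrors the put/del patch shape from the pull endpoint.
type PatchOp =
  | { op: "del"; key: string }
  | { op: "put"; key: string; value: unknown };

// Return a new map with the patch applied in order; the input is not mutated.
function applyPatch(
  state: Map<string, unknown>,
  patch: PatchOp[],
): Map<string, unknown> {
  const next = new Map(state);
  for (const p of patch) {
    if (p.op === "put") {
      next.set(p.key, p.value);
    } else {
      next.delete(p.key);
    }
  }
  return next;
}

// Local state before the pull.
const local = new Map<string, unknown>([
  ["todo/1", { text: "Buy milk" }],
  ["todo/2", { text: "Call mom" }],
]);

// A pull response that deletes one todo and adds another.
const updated = applyPatch(local, [
  { op: "del", key: "todo/1" },
  { op: "put", key: "todo/3", value: { text: "Book flight" } },
]);
```

After the patch, `updated` holds `todo/2` and `todo/3`. Sending deletions explicitly matters here: a client that was offline has no other way to learn that `todo/1` is gone.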

#Now what?

You may be reading this offline. Unfortunately GitHub and Discord still need a connection for most things, so when you're back online, drop us a note on GitHub, and join our Discord channel to keep in touch. We'd love to hear what kind of cool offline-first applications you'll build with Replicache and ChiselStrike!