Replicate my *entire* production database? You must be mad!

Database edge replication is a great strategy for reducing query latency, until it costs too much. Here's what you can do to fix that.

If you've been following Turso, you know that we're excited about bringing SQLite to the edge, with low latency queries around the world. We achieve this by giving you the ability to replicate your database to a number of locations you choose, ideally geographically close to the code that performs the queries.

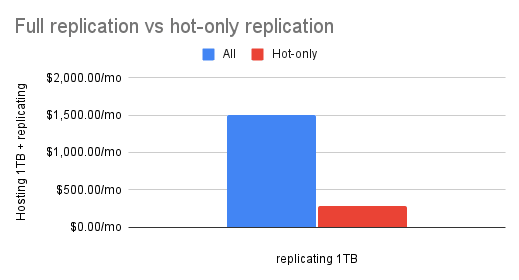

This is all good, and you can see for yourself what those latencies look like, but we'd be dishonest if we told you that full database replication is a great strategy for all application databases. One very real issue you might run into is the overall cost of replication. It stands to reason that your costs will go up for each new replica you create — after all, storage is not free. For newer projects with smaller initial data sets, replication won't break the bank, but as your app data grows in production, costs can quickly accelerate. Here's an example:

Let's say you start with 4GB of data with a per-GB cost of $.25/month. That's $1/month stored in one location. Now, to boost query speeds, you want to replicate that to all 6 habitable continents — that's $6/month. Not bad. But imagine one year later when you've scaled up to 1TB of production data all replicated in those 6 locations. That's now a bill of $1500/month (compared to the $250 in the original single location). Each additional location multiplies the cost up by another 1x! While all that data will be very fast to query, it might not be worth the cost.

#So what do we do about accelerating replication costs?

One thing we encourage developers to think about is which data is the most “hot” in their system. By that, I mean the tables that are most frequently read, and have the biggest overall impact on the perceived performance of the app. Once you identify the hot and cold data, you can split those into different databases, and set them up to individually replicate according to the benefit that you expect to see in production, with the hot data gaining more replicas. It's all about trading off the cost of replication with its benefit.

In essence, you are figuring out which tables you would like to behave as if they were cached on the edge (similar to static content in a CDN), then paying the cost of making queries on that data extra fast.

From the previous example, what if you knew that only 4GB of the 1TB of production data is hot? Instead of replicating the full 1TB in all 6 locations, you break it down like this:

- Keep the full data in a single location, costing $250/month.

- Replicate the 4GB of hot data to 6 locations. On the Turso Scaler plan at $29/month, you get 6 locations and 24GB of data. This means that hot replicated 4GB fits nicely in all 6 locations.

The total monthly storage cost in this scenario is $250+$29=$279/month. Compared to the $1500/month of replicating the entire 1TB, this is a total reduction of about 500% in your monthly bill.

If you're working on a greenfield project and are able to choose Turso from the start, configuring your replicas is an exceedingly simple task. Just run turso db replicate for each new replica you want (within the limits of your billing plan), then enjoy the lower latency from your co-located code.

But if you've already started with a different database service that doesn't do replication, it's not so easy! We recognize that Postgres is ubiquitous — a real workhorse for the web — and you're not likely to migrate away if it's already serving you well. And that's fine. We can work with that.

#Replicate your Postgres database to Turso with pg_turso

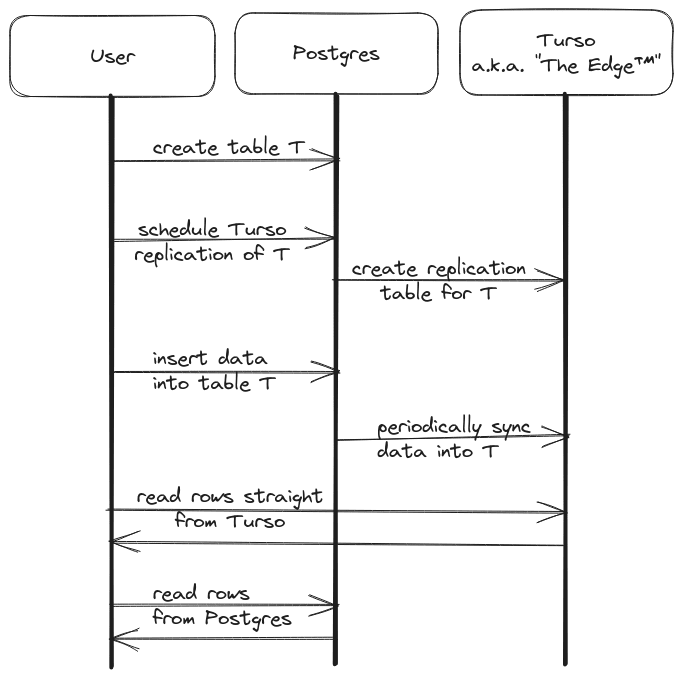

If you're already working with Postgres, and want to apply the above strategy of replicating certain hot tables for global low latency queries, we have a solution for that. pg_turso is a Postgres extension that enables you to choose tables or materialized views for replication to Turso. It's important to note that while using pg_turso, the data in Turso is read-only, much like CDN. Updates to the data must happen at Postgres. Let's see how this works.

You first choose a table (or a materialized view) in Postgres. Tables often are already a subset of your data, and materialized views are a standard way to select a part of your data for special queries, so we hook into that. The extension taps into the logical replication mechanism within Postgres, and then makes sure to sync the Turso database, periodically pushing updates.

#Example: Splicing food data

To show a real example, imagine a table with the following schema, used to track and rate food users tried:

CREATE TABLE food_stats (

user_id int,

cuisine text,

food_item text,

food_quality_index float,

food_avg_price_usd float

);

Let's tentatively load the table with some data:

INSERT INTO food_stats VALUES

(0, 'US', 'gas station sushi', 10.0, 5.00),

(1, 'US', 'dishwasher salmon', 0.0, 4.00),

(2, 'US', 'carolina pulled pork', 9.5, 9.50),

(3, 'SE', 'Surströmming', 1.0, 2.99),

(4, 'FI', 'salmiakki', 6.0, 1.99),

(5, 'PL', 'żurek', 8.0, 1.29)

;

We may decide that it doesn't make sense to replicate data about food that people are unlikely to eat. We can accomplish that by creating a materialized view for storing entries with a quality index equal or higher than 8:

CREATE MATERIALIZED VIEW good_food_stats AS

SELECT user_id,

food_item,

food_quality_index,

food_avg_price_usd

FROM food_stats

WHERE food_quality_index >= 8.0;

Now that we have a materialized view in Postgres, let's schedule its replication to the edge.

In order to install the pg_turso extension, follow our guidelines.

Once pg_turso is set up, we're ready to schedule the replication with just a few SQL statements:

1. Load the pg_turso extension:

CREATE EXTENSION pg_turso;

2. Set up Turso authentication by creating two Postgres functions — one for the URL, another for your access token:

CREATE FUNCTION turso_url() RETURNS TEXT LANGUAGE SQL AS

$$ SELECT 'https://your-db-link-here.turso.io'; $$;

CREATE FUNCTION turso_token() RETURNS TEXT LANGUAGE SQL AS

$$ SELECT 'your-token-here'; $$;

3. Schedule the replication by specifying the view to replicate and the refresh interval:

SELECT turso_schedule_mv_replication('good_food_stats', '5 seconds');

That's it! pg_turso's materialized view replication is based on pg_cron, and turso_schedule_mv_replication function returns a pg_cron handle you can later use to unschedule the replication if needed.

After a few moments, you can enjoy the freshly replicated data right in your Turso database. The schema was migrated automatically, and all rows were transferred straight to the edge.

$ turso db shell pgturso-example

→ select * from good_food_stats;

USER ID FOOD ITEM FOOD QUALITY INDEX FOOD AVG PRICE USD

0 gas station sushi 10.0 5.00

2 carolina pulled pork 9.5 9.50

5 żurek 8 1.29

Besides materialized views, pg_turso is also perfectly capable of replicating whole tables. You can use the turso_schedule_table_replication function to make an entire table available at the edge:

SELECT turso_schedule_table_replication('food_stats', '30 seconds');

#Summary

For an edge architecture, replicating all of your data everywhere works, but it can become expensive. The edge is great for data that has to be accessed fast, close to your users. You can save money by identifying which data is expected to be hot, and replicate just that.

For users that already have a Postgres deployment, tables and materialized views are a natural way to partition your data. For those use cases, pg_turso allows you to periodically sync tables and materialized views to the Edge. Note that pg_turso is currently experimental, and we would love your feedback on it, and know how you would like to use it for your product.

If you want to put this kind of edge architecture in motion, you can sign up for Turso now, and start syncing your Postgres data today with pg_turso.