Microsecond-level SQL query latency with libSQL local replicas

The fastest access to your database is when your database is embedded in your application. However, most contemporary databases such as PostgreSQL and MySQL use a client-server model where the client…

The fastest access to your database is when your database is embedded in your application. However, most contemporary databases such as PostgreSQL and MySQL use a client-server model where the client executes SQL remotely over a network write protocol. But there's one database engine that's built different: SQLite. It implements a full-blown SQL relational database engine that you can just drop into your application for microsecond-level SQL queries.

Unfortunately, SQLite is really built around the concept of a local filesystem, which makes it hard to build applications that access a shared database. That's why when we started working towards making SQLite easier to use for applications, we built a server in our fork, libSQL, that allows remote SQL execution over HTTP, much like what you see with other database such as Neon or PlanetScale. The server approach is great for serverless applications as well, because the serverless platforms such as like Cloudflare Workers or AWS Lambda don't have enough persistent state to bring over SQLite.

But there is another way to make SQLite work for applications that need a shared database: replication. LiteFS is a popular SQLite replication sidecar that works via a special user space filesystem that let's applications just use SQLite, with LiteFS dealing with replication in the background. But since remote SQL execution with HTTP is important for serverless, it would be awesome to have both options.

So how does it work?

First, if you're working with SQLite you might have a database with a users table with two columns, name and email:

$ sqlite3 hello.db

SQLite version 3.42.0 2023-05-16 12:36:15

Enter ".help" for usage hints.

sqlite> CREATE TABLE users (name TEXT, email TEXT);

sqlite> INSERT INTO users VALUES ('Pekka Enberg', 'penberg@iki.fi');

To access the local database file, you can use a new libsql Rust crate as follows:

use libsql::{Database, params};

fn main() -> anyhow::Result<()> {

// Path to the database file.

let db_file = "hello.db";

// Open the database file.

let db = Database::open(db_file)?;

// Create a connection to the database.

let conn = db.connect()?;

// Prepare a SQL statement for execution.

let query = conn.prepare("SELECT name, email FROM users")?;

// Execute the prepared SQL statement.

let rows = query.query(¶ms![])?;

// Fetch the first row from the result set.

let row = rows.next()?.unwrap();

// Extract columns from the row...

let name = row.get::<&str>(0).unwrap();

let email = row.get::<&str>(1).unwrap();

// ...and print them out.

println!("Name: {}, email: {}", name, email);

Ok(())

}

If you run the program, you can see the first row of the users table printed out:

penberg@vonneumann libsql % cargo run

Finished dev [unoptimized + debuginfo] target(s) in 3.79s

Running `/Users/penberg/src/libsql/libsql-examples/rust/target/debug/libsql-example`

Name: Pekka Enberg, email: penberg@iki.fi

If you are a Rust developer, the example program might look familiar to you. And that's because the API is inspired by rusqlite, a popular Rust API for SQLite. The same program using rusqlite would look as follows:

use rusqlite::Connection;

fn main() -> anyhow::Result<()> {

let conn = Connection::open("hello.db")?;

let mut query = conn.prepare("SELECT name, email FROM users")?;

let mut rows = query.query([])?;

let row = rows.next()?.unwrap();

let name: String = row.get(0).unwrap();

let email: String = row.get(1).unwrap();

println!("Name: {}, email: {}", name, email);

Ok(())

}

So far so good, but how does the libsql crate provide replication?

Well, first we need the libSQL server running:

penberg@vonneumann sqld % cargo run

Finished dev [unoptimized + debuginfo] target(s) in 0.91s

Running `target/debug/sqld`

_ _

___ __ _| | __| |

/ __|/ _` | |/ _` |

\__ \ (_| | | (_| |

|___/\__, |_|\__,_|

|_|

Welcome to sqld!

version: 0.17.2

commit SHA: be9a6efc48948179427fcbee4d4a8e391a943ac6

build date: 2023-08-08

This software is in BETA version.

If you encounter any bug, please open an issue at https://github.com/libsql/sqld/issues

config:

- mode: standalone

- database path: data.sqld

- extensions path: <disabled>

- listening for HTTP requests on: 127.0.0.1:8080

- grpc_tls: no

2023-08-08T12:27:58.227665Z WARN sqld: No authentication specified, the server will not require authentication

2023-08-08T12:27:58.228429Z INFO sqld::http: listening for HTTP requests on 127.0.0.1:8080

2023-08-08T12:27:58.228456Z WARN sqld: No server heartbeat configured

Then we need to create the schema and insert data into into like we did with plain SQLite.

(We're going to just borrow the Turso CLI for this because sqld does not ship with a shell yet.)

penberg@vonneumann ~ % turso db shell http://localhost:8080

Connected to http://localhost:8080

Welcome to Turso SQL shell!

Type ".quit" to exit the shell and ".help" to list all available commands.

→ CREATE TABLE users (name TEXT, email TEXT);

→ INSERT INTO users VALUES ('Pekka Enberg', 'penberg@iki.fi');

We can just switch our simple program to synchronize the database file from the server by changing the code to:

use libsql::{Database, Opts, params};

#[tokio::main]

async fn main() -> anyhow::Result<()> {

let db_file = "hello.db";

let opts = Opts::with_http_sync("http://localhost:8080".to_owned());

let db = Database::open_with_opts(db_file, opts).await?;

let conn = db.connect()?;

db.sync().await?;

let query = conn.prepare("SELECT name, email FROM users")?;

let rows = query.query(¶ms![])?;

let row = rows.next()?.unwrap();

let name = row.get::<&str>(0).unwrap();

let email = row.get::<&str>(1).unwrap();

println!("Name: {}, email: {}", name, email);

Ok(())

}

And now if we run the program, the libsql crate will synchronize its database file (that starts out as empty) from the server and perform SQL queries locally:

penberg@vonneumann libsql-sync % cargo run

Updating git repository `https://github.com/libsql/libsql/`

Compiling libsql-sync-example v0.1.0 (/Users/penberg/src/libsql/libsql-examples/rust/libsql-sync)

Finished dev [unoptimized + debuginfo] target(s) in 2.43s

Running `/Users/penberg/src/libsql/libsql-examples/rust/target/debug/libsql-sync-example`

Name: Pekka Enberg, email: penberg@iki.fi

It's not just Rust either. We're building language bindings for JavaScript, Python, and Go on top of the libsql Rust crate. For example, with JavaScript you can do the following to synchronize your database file and query locally:

import Database from 'libsql-experimental';

const options = { syncUrl: 'http://localhost:8080' };

const db = new Database('hello.db', options);

db.sync();

const row = db.prepare('SELECT * FROM users WHERE id = ?').get(1);

console.log(`Name: ${row.name}, email: ${row.email}`);

(If you're a JavaScript developer and that looks familiar to you, that's because it's inspired by better-sqlite3, although we're switching to sqlite-async to support write delegation later.)

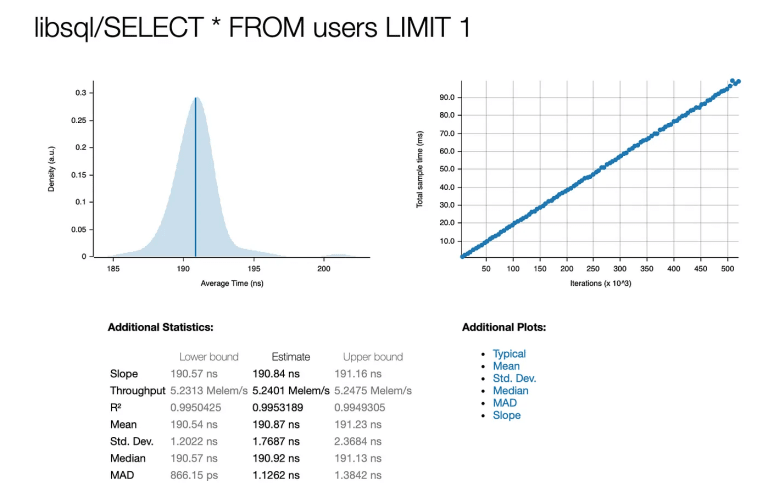

So how fast is it? Well, as fast as SQLite. The micro-benchmarks in the libsql crate, for example, show that with prepared statements, you can perform a SQL query in just 200 nanoseconds:

penberg@vonneumann core % cargo bench

Running benches/benchmark.rs (/Users/penberg/src/libsql/libsql/crates/target/release/deps/benchmark-a95c7258e88dbd24)

Gnuplot not found, using plotters backend

libsql/SELECT * FROM users LIMIT 1

time: [190.57 ns 190.84 ns 191.16 ns]

thrpt: [5.2313 Melem/s 5.2401 Melem/s 5.2475 Melem/s]

Found 6 outliers among 100 measurements (6.00%)

2 (2.00%) low mild

3 (3.00%) high mild

1 (1.00%) high severe

libsql/SELECT * FROM users LIMIT 100

time: [190.76 ns 190.95 ns 191.13 ns]

thrpt: [5.2320 Melem/s 5.2370 Melem/s 5.2422 Melem/s]

Found 4 outliers among 100 measurements (4.00%)

2 (2.00%) high mild

2 (2.00%) high severe

If that sounds insanely fast, that's because SQLite is running like a bat out of hell and in the micro-benchmark it's really just reading from DRAM (well, really your CPU caches). For real use cases, you can still expect microsecond-level query latency, though.

There's still heaps of work to do. We want the libsql crate to be the main crate people consume for local database files, local replicas, but also remote SQL execution over HTTP. We essentially want people to be able to have their SQLite database wherever they need it, inside the application, on an edge replica, laptop, or wherever they're running their application and need their database. We'll also be obviously integrating this in the Turso CLI when things are more stable.