Why can't we be friends?


Some people call me old. But the ones who want something from me usually prefer terms like wise or experienced. I've been around for a while, working with systems-level technologies like the Linux kernel and ScyllaDB, a high-performance NoSQL database. Both are squarely considered backend technologies: the ugly stuff you don't need to see.

What is not widely known is that early in my career I did what modern terminology would call “full-stack” development. In fact, that's pretty much what all of us did.

Back in the day, web development was simple: you configured a database, which was either Postgres or MySQL, and wrote some PHP code and some HTML (server-side rendering before it was cool). If you needed interactivity, you added a sprinkle of JavaScript, and… done. A single person could do it, and even on a large project involving many people, the skill set was not very specialized.

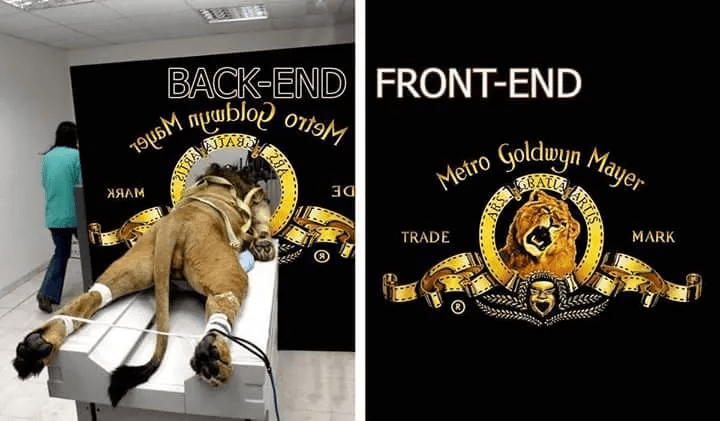

But somewhere along the road, things changed dramatically. As everything got more complex, specialization followed. And all of a sudden you were either a frontend or a backend developer. A schism formed, as if it were 1054 all over again.

And as is common with a family that splits, those two groups grew further and further apart. That is visible in technologies like GraphQL, whose whole premise revolves around “frontend developers can now move independently of backend developers”. Different skill sets, different tools, and especially different practices keep those communities apart. Even with things like Node pushing JavaScript to the server, you still need a data layer running somewhere else, operated by specialized people, to make it useful. The big divide is still there.

Modern web development is an absolute marvel. You write your TypeScript, push it to a git repository, and a couple of seconds later it is just “there”. And by “there” I mean everywhere: through caching and CDNs, users will connect to the nearest location without worrying about servers, management, nodes, anything like that. Serverless functions are taking over the dynamic aspects of the web.

But backends are yet to catch up. They are heavy, complex, and full of jargon and specialized knowledge. A lot of that comes from the belief that code is fast, dynamic, and cheap. It runs on CPUs and accesses memory, y'know, the fast stuff.

Storage is perceived to be slow, complex, and expensive. Even things like connection pooling to a database are hard to achieve from traditional serverless functions. If the access is going to be slow anyway, then why bother?

As long as the divide between code and data exists, the frontend-backend divide will persist. One side deals with data and storage, the slow stuff, while the other deals with functions and computation, the cool and fast stuff. But that assumption is outdated: in light of recent advances in storage technology, it is time we revisit it.

What if code and data could be friends again? The past few years have witnessed a revolution bubbling up from the bottom of the stack. Storage got cheaper, and way faster. That is leading database technology to rethink age-old mantras like “do whatever computation you must to avoid going to storage”. Nowadays, the CPU is often the bottleneck.

Yes, really! Don't believe me? Here's Linux developer Jens Axboe demonstrating a storage device doing 10 MILLION IOPS on a single core. It's the wild west out there.
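To put that number in perspective, here is a back-of-the-envelope calculation. At 10 million I/O operations per second on one core, each I/O gets 1 s / 10^7 = 100 ns of that core's time, which is on the same order as a single main-memory reference that misses the CPU caches. Assuming a 3 GHz clock (an illustrative figure, not one taken from the demo), that leaves about 300 cycles per I/O for all the software involved, which is why the CPU, not the device, starts to look like the bottleneck.

```typescript
// Back-of-the-envelope: CPU time available per I/O when one core
// completes 10 million I/O operations per second.
const IOPS_PER_CORE = 10_000_000; // figure from the demo mentioned above
const ASSUMED_CLOCK_HZ = 3_000_000_000; // assumption: a ~3 GHz core

// Nanoseconds of that core's time available per I/O.
function nsPerIo(iops: number): number {
  return 1e9 / iops;
}

// CPU cycles available per I/O at the given clock rate.
function cyclesPerIo(iops: number, clockHz: number): number {
  return clockHz / iops;
}

console.log(`${nsPerIo(IOPS_PER_CORE)} ns per I/O`); // 100 ns per I/O
console.log(`${cyclesPerIo(IOPS_PER_CORE, ASSUMED_CLOCK_HZ)} cycles per I/O`); // 300 cycles per I/O
```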

That's what led us to create ChiselStrike: there's no need for code and data to be apart. They can be together, and once that happens, frontend and backend can be friends again too.

ChiselStrike is a TypeScript execution engine with native data access capabilities. It's currently in developer preview: join the party here! It has a local development mode, so you can experiment on your laptop while offline. Coming soon is a managed service to which you can git-push your code straight to the edge, with the same experience you're used to from providers like Vercel and Netlify. We are also proud to have their founders, Guillermo Rauch (Vercel) and Matt Biilmann and Chris Bach (Netlify), as early backers, alongside top-tier VC funds like Mango Capital, First Star and Essence.

With ChiselStrike, data models are TypeScript classes. For example, here is a `Person` entity with a name and a country:

```typescript
import { ChiselEntity } from '@chiselstrike/api';

export class Person extends ChiselEntity {
  name: string;
  country: string;
}
```

And then if you want to use it to find all people in a specific country, you would write the following code:

```typescript
import { Person } from "../models/Person";
import { responseFromJson } from "@chiselstrike/api";

export default async function (req: Request) {
  const url = new URL(req.url);
  // searchParams.get() returns null when the parameter is absent; map it to undefined.
  const country = url.searchParams.get("country") ?? undefined;
  const response = await Person.findMany({ country });
  return responseFromJson(response);
}
```
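`URLSearchParams.get` returns `string | null`, which is why the handler turns a missing parameter into `undefined` with `?? undefined`. When an endpoint accepts several optional query parameters, a small helper can build the filter object from whichever ones are present. The `filterFromQuery` helper below is hypothetical (it is not part of `@chiselstrike/api`), and it simply leaves absent keys out rather than relying on how any particular backend treats `undefined` values.

```typescript
// Hypothetical helper (not part of @chiselstrike/api): build a filter object
// containing only the query parameters actually present in the URL.
function filterFromQuery<K extends string>(
  url: URL,
  keys: readonly K[],
): Partial<Record<K, string>> {
  const filter: Partial<Record<K, string>> = {};
  for (const key of keys) {
    const value = url.searchParams.get(key); // string | null
    if (value !== null) {
      filter[key] = value; // absent parameters are omitted entirely
    }
  }
  return filter;
}

// Usage: only `country` is in the query string, so the result has no `name` key.
const requestUrl = new URL("http://localhost/people?country=Canada");
console.log(filterFromQuery(requestUrl, ["name", "country"] as const));
```

The resulting object could then be passed where the handler passes `{ country }`.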

The result of that code is an HTTP endpoint that you can call from anywhere: your favorite framework, curl, or even directly from the browser. It's just there. After feeding some initial data into the database, calling that endpoint with `country=Canada` yields the people in our database who are in Canada:

```json
[
  {
    "id": "931eda2d-b2e0-456b-ba17-faa484101ff7",
    "name": "Glauber",
    "country": "Canada"
  },
  {
    "id": "aa786905-0db7-40c7-a90f-1fd2b8007597",
    "name": "Dejan",
    "country": "Canada"
  }
]
```
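On the frontend, calling this endpoint is an ordinary HTTP request. The sketch below is an assumption-laden illustration: the endpoint URL is a placeholder you supply (the real route depends on how the project is laid out and deployed), `PersonRecord` mirrors the JSON shown above, and the fetch function is passed in as a parameter so the code can be exercised without a network.

```typescript
// Shape of each record returned by the endpoint, mirroring the JSON above.
interface PersonRecord {
  id: string;
  name: string;
  country: string;
}

// Minimal subset of fetch() used here, so a stub can stand in for it in tests.
type FetchLike = (
  input: string,
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Fetch everyone in `country` from `endpoint` (a placeholder URL).
async function fetchPeopleIn(
  endpoint: string,
  country: string,
  fetchImpl: FetchLike,
): Promise<PersonRecord[]> {
  const url = new URL(endpoint);
  url.searchParams.set("country", country); // adds ?country=... with proper encoding
  const res = await fetchImpl(url.toString());
  if (!res.ok) {
    throw new Error(`request failed with HTTP ${res.status}`);
  }
  return (await res.json()) as PersonRecord[]; // trusts the server's shape
}

// Usage in a browser or a recent Node.js, with a hypothetical local URL:
// const people = await fetchPeopleIn("http://localhost:8080/people", "Canada", fetch);
```

The cast at the end trusts the server; a stricter client would validate each record before using it.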

One often-touted advantage of GraphQL is that frontend developers can get efficient endpoints on their own, without back-and-forth with backend developers. Want only some of the fields in a response? No problem!

Because ChiselStrike is a code-and-data platform, we can do that too. In the example below, filtering happens at the database layer: the fields we don't need are not even queried, so the query is as efficient as it gets. That is a big advantage of merging code and data.

```typescript
import { Person } from "../models/Person";
import { responseFromJson } from "@chiselstrike/api";

export default async function (req: Request) {
  const url = new URL(req.url);
  const country = url.searchParams.get("country") ?? undefined;
  // Filter by country and keep only the "name" field; both happen at the database layer.
  const response = await Person.cursor().filter({ country }).select("name").toArray();
  return responseFromJson(response);
}
```

The result:

```json
[{ "name": "Glauber" }, { "name": "Dejan" }]
```
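Why can a chain like `cursor().filter(...).select(...)` be pushed down to the database? Because nothing needs to run until `toArray()` is called: each step can simply record intent. The toy cursor below illustrates that idea over an in-memory array. It is a hypothetical sketch, not ChiselStrike's implementation; `ToyCursor`, `describe()`, and the sample rows are invented for illustration, and the SQL-like string only shows what a storage layer could receive once the filter and projection are known up front.

```typescript
// Toy, in-memory lazy cursor (hypothetical; not ChiselStrike's code).
// Each method returns a new cursor that records the operation; no rows are
// touched until toArray(), so a real engine could turn the chain into one query.
type Row = Record<string, string>;

class ToyCursor {
  constructor(
    private readonly table: string,
    private readonly rows: readonly Row[],
    private readonly where: Readonly<Row> = {},
    private readonly columns: readonly string[] | null = null,
  ) {}

  // Record equality constraints; undefined values add no constraint.
  filter(constraints: Record<string, string | undefined>): ToyCursor {
    const where: Row = { ...this.where };
    for (const [key, value] of Object.entries(constraints)) {
      if (value !== undefined) where[key] = value;
    }
    return new ToyCursor(this.table, this.rows, where, this.columns);
  }

  // Record which columns the caller wants back.
  select(...columns: string[]): ToyCursor {
    return new ToyCursor(this.table, this.rows, this.where, columns);
  }

  // Render the recorded plan as a SQL-like string (illustration only, no escaping).
  describe(): string {
    const cols = this.columns ? this.columns.join(", ") : "*";
    const conds = Object.entries(this.where).map(([k, v]) => `${k} = '${v}'`);
    const whereSql = conds.length > 0 ? ` WHERE ${conds.join(" AND ")}` : "";
    return `SELECT ${cols} FROM ${this.table}${whereSql}`;
  }

  // Only now is the plan executed: filter the rows, then project the columns.
  async toArray(): Promise<Row[]> {
    const { rows, where, columns } = this;
    const matching = rows.filter((row) =>
      Object.entries(where).every(([key, value]) => row[key] === value),
    );
    if (columns === null) return matching.map((row) => ({ ...row }));
    return matching.map((row) => {
      const picked: Row = {};
      for (const column of columns) picked[column] = row[column];
      return picked;
    });
  }
}

// Usage with toy rows:
const toyPeople = new ToyCursor("Person", [
  { name: "Glauber", country: "Canada" },
  { name: "Dejan", country: "Canada" },
  { name: "Ana", country: "Portugal" },
]);
const toyQuery = toyPeople.filter({ country: "Canada" }).select("name");
console.log(toyQuery.describe()); // SELECT name FROM Person WHERE country = 'Canada'
toyQuery.toArray().then((rows) => console.log(rows)); // the two names in Canada
```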

There is a lot more that we can do with the code-and-data approach. Let's say, for example, that we now want to generate a YAML file from a template for each entry in the database. We import the handlebars package, render the template for each person, encode the result as base64, and return it:

```typescript
import * as handlebars from 'handlebars';
import { Person } from '../models/Person';
import { responseFromJson } from '@chiselstrike/api';

// YAML template rendered once per person.
const template = `
name: {{ NAME }}
country: {{ COUNTRY }}
`;

// Compile the template once, at module load, instead of on every call.
const render = handlebars.compile(template);

// Render the template for one person and return the result base64-encoded.
function getFileContents(person: Partial<Person>): string {
  const perUser = { NAME: person.name, COUNTRY: person.country };
  return btoa(render(perUser));
}

export default async function (req: Request) {
  const url = new URL(req.url);
  const country = url.searchParams.get('country') ?? undefined;
  const response = (await Person.findMany({ country })).map((x) => {
    return { id: x.id, base64: getFileContents(x) };
  });
  return responseFromJson(response);
}
```
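One caveat with calling `btoa` directly: it only accepts characters in the Latin-1 range and throws on anything else, so a name written in, say, Cyrillic would make the request fail, and upper Latin-1 characters end up encoded as single Latin-1 bytes rather than UTF-8. A sketch of a UTF-8-safe alternative (the function name is illustrative) encodes the string to UTF-8 bytes first and base64-encodes those bytes. For plain ASCII input, such as the entries in this example, it produces exactly the same output as `btoa`.

```typescript
// Base64-encode a string as UTF-8. Unlike calling btoa() on the string directly,
// this does not throw on characters outside Latin-1.
function base64EncodeUtf8(text: string): string {
  const bytes = new TextEncoder().encode(text); // UTF-8 bytes
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]); // one char per byte, all <= 0xFF
  }
  return btoa(binary);
}

// Usage: same result as btoa() for ASCII input.
console.log(base64EncodeUtf8("\nname: Glauber\ncountry: Canada\n"));
// Cm5hbWU6IEdsYXViZXIKY291bnRyeTogQ2FuYWRhCg==
```

In the handler, `getFileContents` would return `base64EncodeUtf8(...)` in place of `btoa(...)`.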

And the result:

```json
[
  {
    "id": "931eda2d-b2e0-456b-ba17-faa484101ff7",
    "base64": "Cm5hbWU6IEdsYXViZXIKY291bnRyeTogQ2FuYWRhCg=="
  },
  {
    "id": "aa786905-0db7-40c7-a90f-1fd2b8007597",
    "base64": "Cm5hbWU6IERlamFuCmNvdW50cnk6IENhbmFkYQo="
  }
]
```
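On the consuming side, each `base64` field can be turned back into YAML text. `atob` alone yields one character per byte, so the sketch below (function name illustrative) decodes those bytes as UTF-8 with `TextDecoder`; for the plain-ASCII output shown above, the result is the same either way.

```typescript
// Decode a base64 string produced from UTF-8 text back into a JavaScript string.
function base64DecodeUtf8(encoded: string): string {
  const binary = atob(encoded); // one char per byte
  const bytes = Uint8Array.from(binary, (ch) => ch.charCodeAt(0));
  return new TextDecoder().decode(bytes); // UTF-8 by default
}

// Usage with the second entry from the result above:
const yaml = base64DecodeUtf8("Cm5hbWU6IERlamFuCmNvdW50cnk6IENhbmFkYQo=");
console.log(yaml.trim());
// name: Dejan
// country: Canada
```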

These examples are, of course, very simple, but it is easy to imagine more complex use cases and see the advantage. Although there will always be better and worse ways to write any code, notice how you, the user, didn't have to worry about where to store this data, which kind of database technology to use, or which queries to execute.

There's much more to ChiselStrike, which we will explore in the coming months. If you want to see more, you can also check out our YouTube demo here. But for now, let's celebrate code and data living together once more, and hope that we all get to be friends again!